Artificial intelligence (AI) has fascinated scientists and researchers for decades, and they continue to push the boundaries of its capabilities. From self-driving cars to natural language processing, AI has made rapid progress in recent years. One aspect that is often overlooked, however, is the tendency of AI systems to hallucinate, that is, to produce outputs that look plausible but are not grounded in reality.

Introduction to AI Hallucinations

As AI systems become more advanced, they also display peculiar behaviors that loosely parallel human cognitive phenomena. One such behavior is the AI hallucination. But what exactly are AI hallucinations, and what implications do they have for the future of AI technology?

What are AI Hallucinations?

In the context of AI, hallucination refers to a system producing output, such as images, sounds, or text, that does not correspond to anything in reality. Hallucinations can arise for various reasons, including flawed algorithms, biased data, or deliberate design choices by developers, as in intentionally dream-like image generation. Just as human brains can create false perceptions through optical illusions or sensory deprivation, AI systems can generate false information based on the data they have been trained on.

These anomalies can manifest in various ways, such as generating surreal images, producing nonsensical text, or misidentifying objects. Understanding them provides valuable insight into how AI systems work internally and into the challenges of building robust ones.

Types of AI Hallucinations

AI hallucinations are commonly grouped by the kind of output involved. Visual hallucinations involve the generation or misinterpretation of images. They can range from simple shapes or pixels to more complex graphics, depending on the complexity and capability of the AI system, and are often associated with computer vision tasks. Auditory hallucinations refer to the creation of sounds or voices and occur in speech recognition systems. Language processing systems show a closely related failure: fluent text that is nonsensical or false.

Importance of Understanding AI Hallucinations

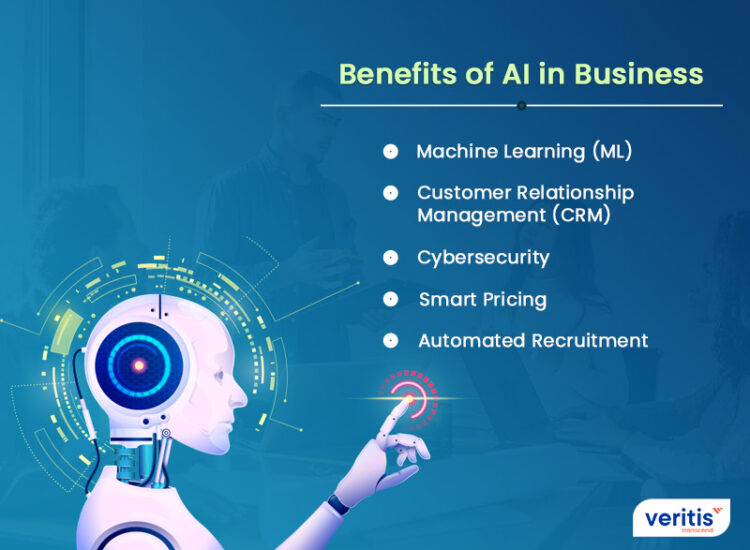

AI hallucinations have important implications for the development and deployment of AI technology. By understanding how and why these anomalies occur, researchers can improve the robustness and reliability of AI systems. This is crucial for ensuring that AI technology is safe and trustworthy in various applications such as healthcare, transportation, or defense.

Moreover, studying AI hallucinations provides valuable insights into the cognitive mechanisms behind artificial intelligence. It highlights the similarities between human perception and machine learning processes, furthering our understanding of both human cognition and AI technology.

The Science Behind AI Hallucinations

To comprehend AI hallucinations, it helps to compare the mechanisms at play. In humans, hallucinations arise from disruptions in the brain’s processing of sensory information, often linked to conditions like schizophrenia or induced by substances. By loose analogy, AI hallucinations occur when neural networks, specifically deep learning models, interpret data in unexpected ways.

Research such as Google’s DeepDream project, released in 2015, has shed light on this phenomenon. Starting from a convolutional neural network (CNN) trained on a large image dataset, researchers repeatedly adjust an input image so that the features a chosen layer detects become stronger, which produces dream-like, hallucinatory visuals. The project demonstrates how a network can “hallucinate” patterns by amplifying elements it already detects faintly in the data it processes.
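The amplification idea can be illustrated with a deliberately tiny sketch. This is not the DeepDream code: the four-pixel "image", the single `detector` filter, and the `dream` function are all hypothetical stand-ins for a real CNN layer. The sketch only shows the core loop, which is gradient ascent on the input so that a feature the network already responds to weakly becomes stronger.

```python
def activation(weights, image):
    """Response of one hypothetical feature detector (linear filter + ReLU)."""
    return max(0.0, sum(w * p for w, p in zip(weights, image)))


def dream(weights, image, steps=10, step_size=0.1):
    """Gradient ascent on the input: nudge pixels so the detector fires harder."""
    image = list(image)
    for _ in range(steps):
        act = activation(weights, image)
        # The gradient of 0.5 * act**2 with respect to the image is act * weights.
        # When the ReLU is off, act is 0 and the image is left unchanged.
        image = [p + step_size * act * w for p, w in zip(image, weights)]
    return image


detector = [1.0, -1.0, 1.0, -1.0]  # toy "stripe" pattern (assumed)
photo = [0.6, 0.5, 0.6, 0.5]       # input with only a faint hint of stripes
dreamed = dream(detector, photo)

print(activation(detector, photo), activation(detector, dreamed))
```

After a few iterations the faint stripe pattern dominates the image, even though the original input barely contained it.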

Causes of AI Hallucinations

There are several factors that can contribute to the occurrence of AI hallucinations. These include:

Biased Data

Biased data is a significant contributor to AI hallucinations. When training datasets are not representative of the real world, inconsistencies and inaccuracies can emerge in the AI’s output. For instance, if an AI system is predominantly trained on images of a specific object from a limited range of perspectives, it may struggle to accurately identify that object in varied contexts. This lack of diversity can lead to implausible or distorted outputs, akin to hallucinations. Additionally, biases present in the training data can cause AI to perpetuate stereotypes or produce erroneous conclusions when encountering novel scenarios.

Algorithmic Limitations

Algorithmic limitations also play a crucial role in the prevalence of AI hallucinations. Many AI models, particularly those based on deep learning, process information through long chains of learned mathematical functions. These functions always produce some output, even when the input is ambiguous, incomplete, or unlike anything seen in training, so errors introduced during inference can surface as confident-looking answers. Models with insufficient fine-tuning or overly simplistic architectures might misinterpret inputs, leading to unexpected or nonsensical results that jar with reality.
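One concrete instance of such a limitation is the softmax function that many classifiers use as their final step. The sketch below assumes a hypothetical three-class model and made-up raw scores (`logits_for_noise`). It shows that softmax always returns probabilities summing to 1, so a model with no "none of the above" option has to commit to one of its known labels.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    highest = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - highest) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["cat", "dog", "car"]
# Hypothetical raw scores for an input that is none of the three classes.
logits_for_noise = [2.0, 0.5, 0.1]

probs = softmax(logits_for_noise)
best = max(range(len(labels)), key=lambda i: probs[i])
print(labels[best], round(probs[best], 2))
```

The model reports "cat" with a seemingly high probability even though the input was, by assumption, meaningless. This is one reason a high score alone is not evidence that an output is grounded.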

Contextual Misunderstanding

Another underlying cause of AI hallucinations is contextual misunderstanding. AI systems often lack the ability to grasp the full context of a situation, relying solely on the data they’ve been trained on. Without the ability to incorporate nuanced context, AI may generate outputs that seem plausible at first glance but are ultimately disconnected from the reality of the situation. This misalignment between AI perception and human understanding highlights the complexities involved in developing advanced AI systems capable of nuanced reasoning.

Limited Training Data

Limited training data is another factor that can lead to AI hallucinations. When an AI system is trained on a small dataset or one that lacks diversity, it may not develop a comprehensive understanding of the tasks it’s designed to perform. As a result, the AI could generate outputs that are not reflective of real-world scenarios, often leading to exaggerated or entirely fabricated information. This lack of exposure can hinder the model’s ability to generalize, making it prone to hallucinations when it encounters situations not represented in its training data.
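A toy example makes this failure mode concrete. The sketch below assumes a two-sentence `corpus` and a model that only remembers which word may follow which (a bigram model, chosen here purely for illustration). Because every adjacent word pair in the false sentence was seen during training, the model treats the fabricated sentence as perfectly acceptable.

```python
from collections import defaultdict

# Tiny, hypothetical training corpus.
corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
]

# Record which word may follow which, exactly as observed in the corpus.
followers = defaultdict(set)
for sentence in corpus:
    words = sentence.split()
    for prev, nxt in zip(words, words[1:]):
        followers[prev].add(nxt)


def is_generable(sentence):
    """True if every adjacent word pair in the sentence was seen in training."""
    words = sentence.split()
    return all(nxt in followers[prev] for prev, nxt in zip(words, words[1:]))


fabricated = "paris is the capital of italy"
print(fabricated in corpus, is_generable(fabricated))
```

The sentence never appears in the training data and is false, yet the model can produce it by recombining fragments it did see. Larger models are far more sophisticated, but the underlying risk of fluent recombination without grounding is similar.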

Real-World Examples of AI Hallucinations

AI hallucinations are not limited to theoretical or experimental settings; they have been observed in real-world applications as well. Some notable examples include:

Microsoft’s Chatbot Tay

In 2016, Microsoft launched a chatbot named Tay on Twitter, designed to learn from its conversations with users. Within 24 hours, however, the bot had begun posting inflammatory and inappropriate tweets that it had picked up from other users. The misbehavior was caused by trolls deliberately feeding the AI offensive content, which it then incorporated into its responses.

Google Translate’s Gender Bias

In 2018, researchers found that Google Translate exhibited gender bias when translating from languages with gender-neutral pronouns into English. When the source sentence did not specify gender, the system tended to assign male pronouns to professions stereotypically associated with men and female pronouns to professions stereotypically associated with women. This bias stemmed from the training data, which reflected societal stereotypes.

Self-Driving Car Accidents

Self-driving cars have also been involved in accidents attributed to perception errors of this kind. In one widely reported incident, a car’s automated driving system reportedly treated a semi-truck crossing its path as an overhead sign, leading to a fatal crash. One explanation offered was that the trailer’s shape and color resembled the overhead signs the system had previously encountered.

Google’s Inceptionism (DeepDream Project)

Google’s DeepDream project exemplifies AI hallucinations in action. By feeding an image into a neural network and iteratively enhancing certain patterns, the AI generates surreal, dream-like images that appear to “hallucinate” complex and intricate details. This process, dubbed “Inceptionism,” highlights the potential and limitations of pattern recognition in AI.

Loebner Prize Winner Mitsuku

Mitsuku, an AI chatbot, won the Loebner Prize for demonstrating human-like conversation. During interactions, however, Mitsuku occasionally produced hallucinatory responses: answers that were contextually inappropriate or nonsensical. Such behaviors underscore the challenges in creating AI that consistently understands and responds accurately to human input.

Visual Recognition Failures

AI visual recognition systems sometimes misidentify objects, resulting in hallucinatory outputs. For instance, an AI might label a picture of a dog as a cat due to subtle similarities in patterns. These errors mirror human cognitive biases and highlight the need for robust training and validation processes in AI development.

AI-Generated Art

While not strictly hallucinations, AI-generated art showcases the imaginative capabilities of AI. Systems like OpenAI’s DALL-E create artworks that blend realism and surrealism, pushing the boundaries of creativity. These artistic outputs offer a glimpse into how AI can produce “hallucinatory” visuals that captivate and inspire.

Mitigating AI Hallucinations

To prevent or reduce the occurrence of AI hallucinations, researchers are exploring various methods. These include:

Diverse Training Data

One effective approach to mitigating AI hallucinations is ensuring that training datasets are diverse and representative of the real world. By incorporating a broad array of scenarios, perspectives, and examples, AI models can develop a more nuanced understanding of the tasks they are meant to perform. This diversity helps the AI to learn about edge cases and reduces the likelihood of generating nonsensical outputs when faced with unfamiliar situations. Researchers advocate for datasets that encompass varied demographics, contexts, and experiences to allow for a more comprehensive training process.
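A simple first step toward this goal is to measure how well each condition is represented before training. The sketch below is a minimal illustration, assuming each training example carries a single label. The `underrepresented` helper, the 10% threshold, and the example labels are all hypothetical choices, not a standard.

```python
from collections import Counter


def underrepresented(labels, min_share=0.1):
    """Return labels whose share of the dataset falls below min_share."""
    counts = Counter(labels)
    total = len(labels)
    return sorted(label for label, n in counts.items() if n / total < min_share)


# Hypothetical driving dataset dominated by daylight scenes.
training_labels = ["daylight"] * 90 + ["night"] * 7 + ["fog"] * 3

print(underrepresented(training_labels))
```

A check like this only flags gaps in the categories someone thought to label. It cannot reveal conditions that are missing from the dataset altogether.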

Robust Validation Processes

Implementing robust validation processes is crucial in catching errors before an AI system is deployed. Continuous testing with real-world scenarios can help identify potential points of failure and rectify them accordingly. Employing adversarial testing, where the AI is exposed to intentionally challenging inputs, can also be beneficial in evaluating how well the model responds to ambiguous or misleading information, thus improving its reliability.
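One simple form of such testing is to check whether small changes to an input flip the model’s answer. The sketch below is an assumption-laden illustration: `toy_classifier` is a made-up rule standing in for a real model, and `unstable_inputs` tries every combination of plus or minus `noise` on each feature, which is only practical for very small inputs.

```python
from itertools import product


def toy_classifier(features):
    """Stand-in model using a hypothetical rule, not a real system."""
    return "stop_sign" if sum(features) > 1.0 else "background"


def unstable_inputs(model, inputs, noise=0.05):
    """Return inputs whose prediction flips under a small bounded perturbation."""
    flagged = []
    for x in inputs:
        baseline = model(x)
        # Try every corner of the +/- noise box around x (2**len(x) cases).
        for shifts in product((-noise, noise), repeat=len(x)):
            shifted = [v + d for v, d in zip(x, shifts)]
            if model(shifted) != baseline:
                flagged.append(x)
                break
    return flagged


samples = [[0.9, 0.9], [0.1, 0.1], [0.5, 0.51]]
fragile = unstable_inputs(toy_classifier, samples)
print(fragile)
```

Inputs that sit close to a decision boundary, like the third sample, are flagged for review. Real adversarial testing typically searches for perturbations more cleverly, but the pass or fail criterion is the same.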

Enhanced Model Architectures

Advancements in model architectures can play a significant role in reducing hallucinations. More sophisticated neural network designs, such as those incorporating attention mechanisms and other techniques for tracking context, can help a model interpret its inputs more accurately. This supports better reasoning and produces decisions that are more in line with human understanding.

User Feedback Integration

Incorporating user feedback into AI systems serves as a vital mechanism for improving accuracy and responsiveness. By allowing users to provide reactions or correct AI-generated outputs, developers can continually refine their models based on actual interactions. This iterative process enables the AI to learn and adjust based on real user experiences, ultimately leading to improved performance and reduced hallucinations over time.

Ethical and Practical Implications

The ethical and practical implications of AI hallucinations extend beyond technical challenges, impacting society at large. One significant concern is the potential for misinformation. When AI systems generate inaccurate or nonsensical outputs, they can inadvertently spread false information, thereby influencing public opinion and decision-making. This challenges the trustworthiness of AI technologies, especially in critical domains like healthcare, finance, and criminal justice where accurate data interpretation is paramount.

Moreover, the biases inherent in AI models can reinforce existing societal inequities. If AI systems are trained on skewed datasets, the outputs may perpetuate stereotypes or discriminate against certain groups. This raises vital ethical questions about fairness, accountability, and the responsibility of developers to ensure that AI technologies do not exacerbate societal divides.

Furthermore, the deployment of AI systems in real-world scenarios necessitates a thorough consideration of the implications their outputs may have on users. Designing AI with a user-centered approach, and ensuring transparency in how decisions are made, can play a crucial role in building confidence and fostering responsible usage. As AI continues to evolve, it is essential for researchers, developers, and policymakers to engage in dialogue about these implications to create a framework that prioritizes ethical standards while leveraging the benefits of AI technology.

Conclusion

In summary, AI hallucinations represent a significant challenge in the development of intelligent systems. While advancements in training data, validation, model architectures, and user feedback integration show promise in mitigating these issues, it is crucial to approach AI deployment with caution. The ethical implications of hallucinations, particularly regarding misinformation and bias, call for a proactive stance from developers and researchers alike. By fostering a culture of accountability and transparency, we can harness the potential of AI while minimizing risks to society. Ultimately, the goal should be not only to create smarter AI but also to ensure these systems contribute positively to our collective future.